Guo C, Rana M, Cisse M, et al. Countering adversarial images using input transformations[J]. arXiv preprint arXiv:1711.00117, 2017.

1. Overview

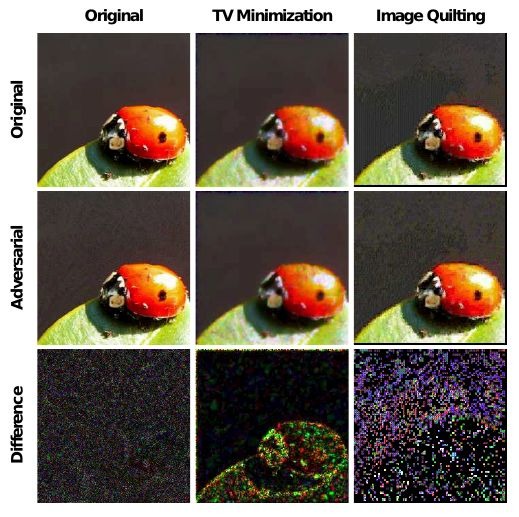

This paper investigates strategies that defend against adversarial-example attacks on image-classification systems by transforming the inputs before they are fed to the classifier:

- bit-depth reduction

- JPEG compression

- total variance minimization

- image quilting

- key finding: total variance minimization and image quilting are very effective defenses in practice

1.1. Related Works

1.1.1. Model-Specific Strategy

- enforce model properties such as invariance and smoothness

1.1.2. Model-Agnostic

- remove adversarial perturbation from input

- JPEG compression

- image re-scaling

DeepFool

Carlini-Wagner’s L2 attack

1.2. Type of Attack

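- Model A: trained on clean images

- Model B: trained on transformed images

- gray-box: adversarial examples crafted against Model A are transformed and then classified by Model A

- black-box: adversarial examples crafted against Model A are transformed and then classified by Model B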
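The two settings above differ only in which model classifies the transformed adversarial image. A minimal sketch of that evaluation loop follows; `defended_accuracy` and its arguments (`attack`, `transform`, `classifier`) are hypothetical names introduced for illustration, not code from the paper.

```python
from typing import Callable, Sequence, TypeVar

Image = TypeVar("Image")


def defended_accuracy(
    images: Sequence[Image],
    labels: Sequence[int],
    attack: Callable[[Image, int], Image],
    transform: Callable[[Image], Image],
    classifier: Callable[[Image], int],
) -> float:
    """Fraction of adversarial inputs still classified correctly after the transform.

    `attack` is assumed to craft its perturbation against Model A only.
    """
    if len(images) != len(labels) or len(labels) == 0:
        raise ValueError("images and labels must be non-empty and of equal length")
    correct = 0
    for x, y in zip(images, labels):
        x_adv = attack(x, y)                   # adversarial(Model A)
        if classifier(transform(x_adv)) == y:  # transform, then classify
            correct += 1
    return correct / len(labels)
```

Under these assumptions, passing Model A as `classifier` gives the gray-box number and passing Model B gives the black-box number.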

2. Defence

2.1. Image Cropping-Rescaling

- alter the spatial positioning of adversarial perturbation

- crop and rescale images at training time

- average predictions over random image crops at test time
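The test-time averaging can be sketched as below. This is an illustration under assumptions: `predict` is a hypothetical callable returning class probabilities, the crop size and `n_crops` are arbitrary, and nearest-neighbour resizing stands in for whatever interpolation a real pipeline would use.

```python
import numpy as np


def random_crop_rescale(image: np.ndarray, crop_size: int, rng: np.random.Generator) -> np.ndarray:
    """Take a random square crop and resize it back to the input resolution."""
    h, w = image.shape[:2]
    if crop_size > min(h, w):
        raise ValueError("crop_size must not exceed the image size")
    top = rng.integers(0, h - crop_size + 1)
    left = rng.integers(0, w - crop_size + 1)
    crop = image[top:top + crop_size, left:left + crop_size]
    # Nearest-neighbour upsampling: map each output row/column to a crop index.
    rows = np.arange(h) * crop_size // h
    cols = np.arange(w) * crop_size // w
    return crop[rows][:, cols]


def predict_with_crops(image, predict, crop_size, n_crops=10, seed=0):
    """Average the predictions of `predict` over several random crops."""
    rng = np.random.default_rng(seed)
    probs = [predict(random_crop_rescale(image, crop_size, rng)) for _ in range(n_crops)]
    return np.mean(probs, axis=0)
```

Because each crop lands at a different offset, a perturbation tuned to fixed pixel positions is shifted differently in every prediction that enters the average.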

2.2. Bit-Depth Reduction

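- bit-depth reduction: simple quantization that removes small perturbations in pixel values

- JPEG compression removes small perturbations in a similar way; it is performed at quality level 75 (out of 100)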
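The quantization step described above can be sketched in a few lines. The function name `reduce_bit_depth` and the default of 3 bits are illustrative assumptions; the input is assumed to be a float image scaled to [0, 1].

```python
import numpy as np


def reduce_bit_depth(image: np.ndarray, bits: int = 3) -> np.ndarray:
    """Quantize a float image in [0, 1] to 2**bits levels per channel."""
    if not 1 <= bits <= 8:
        raise ValueError("bits must be between 1 and 8")
    levels = 2 ** bits - 1
    # Snap every pixel to the nearest of the (levels + 1) allowed values.
    return np.round(np.clip(image, 0.0, 1.0) * levels) / levels
```

Two pixel values that differ by less than the quantization step usually collapse to the same level, which is how a small perturbation gets erased; values that straddle a rounding boundary are the exception.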

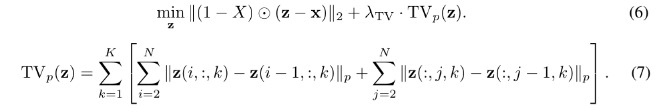

2.3. Total Variance Minimization

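- combined with pixel dropout

- select a random set of pixels by sampling a Bernoulli random variable for each pixel; a pixel is maintained when its variable equals 1

- use total variance minimization to construct an image z that is consistent with the maintained pixels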
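A simplified sketch of this procedure is given below. It is not the paper's solver: it swaps in plain gradient descent on a squared-error data term plus a smoothed anisotropic total-variation penalty, and it handles a single-channel image only. The names `tv_minimize` and `total_variation`, and the `steps`, `lr`, and `eps` values, are assumptions; `keep_prob` and `lam` default to the 0.5 and 0.03 listed in the experiment settings.

```python
import numpy as np


def total_variation(img: np.ndarray) -> float:
    """Sum of absolute differences between vertically and horizontally adjacent pixels."""
    return float(np.abs(np.diff(img, axis=0)).sum() + np.abs(np.diff(img, axis=1)).sum())


def tv_minimize(x, keep_prob=0.5, lam=0.03, steps=200, lr=0.1, eps=1e-3, seed=0):
    """Reconstruct a 2-D image z from a random subset of pixels of x.

    Returns the reconstruction and the boolean mask of maintained pixels.
    """
    rng = np.random.default_rng(seed)
    mask = rng.random(x.shape) < keep_prob  # Bernoulli draw: True means "maintain pixel"
    z = x.astype(float)                      # astype returns a copy, so x is not modified
    for _ in range(steps):
        # Data term: only maintained pixels are pulled back toward x.
        grad = 2.0 * mask * (z - x)
        # Smoothed TV term: gradient of sum(sqrt(d**2 + eps)) over neighbour differences d.
        dv = z[1:, :] - z[:-1, :]
        dh = z[:, 1:] - z[:, :-1]
        gv = dv / np.sqrt(dv ** 2 + eps)
        gh = dh / np.sqrt(dh ** 2 + eps)
        tv_grad = np.zeros_like(z)
        tv_grad[1:, :] += gv
        tv_grad[:-1, :] -= gv
        tv_grad[:, 1:] += gh
        tv_grad[:, :-1] -= gh
        z -= lr * (grad + lam * tv_grad)
    return np.clip(z, 0.0, 1.0), mask
```

Dropped pixels are constrained only by the smoothness penalty, so they drift toward their neighbours, while maintained pixels stay close to their original values.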

2.4. Image Quilting

- synthesize images by piecing together small patches that are taken from a database of image patches
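The patch-replacement idea can be illustrated with the reduced sketch below. It makes several simplifying assumptions: patches do not overlap and no seam optimization is performed, the image is single-channel with sides divisible by the patch size, and each patch is replaced by one drawn at random from its `k` nearest database patches. The function name `quilt` and the parameter `k` are hypothetical.

```python
import numpy as np


def quilt(image: np.ndarray, patch_db: np.ndarray, patch: int = 5, k: int = 10, seed: int = 0) -> np.ndarray:
    """Rebuild `image` from database patches.

    image:    (H, W) float array, H and W divisible by `patch`
    patch_db: (N, patch, patch) float array of patches from a patch database
    """
    h, w = image.shape
    if h % patch or w % patch:
        raise ValueError("image sides must be divisible by the patch size")
    rng = np.random.default_rng(seed)
    flat_db = patch_db.reshape(len(patch_db), -1)
    out = np.empty_like(image)
    for top in range(0, h, patch):
        for left in range(0, w, patch):
            target = image[top:top + patch, left:left + patch].reshape(-1)
            # Squared Euclidean distance from the target patch to every database patch.
            dists = np.sum((flat_db - target) ** 2, axis=1)
            nearest = np.argsort(dists)[:k]
            # Random choice among the k nearest neighbours adds non-determinism.
            out[top:top + patch, left:left + patch] = patch_db[rng.choice(nearest)]
    return out
```

Every pixel of the output comes from the database rather than from the input, so the perturbation itself never reaches the classifier.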

3. Experiments

3.1. Setting

- pixel dropout rate = 0.5

- λ_{TV} = 0.03

- quilting patch size = 5x5

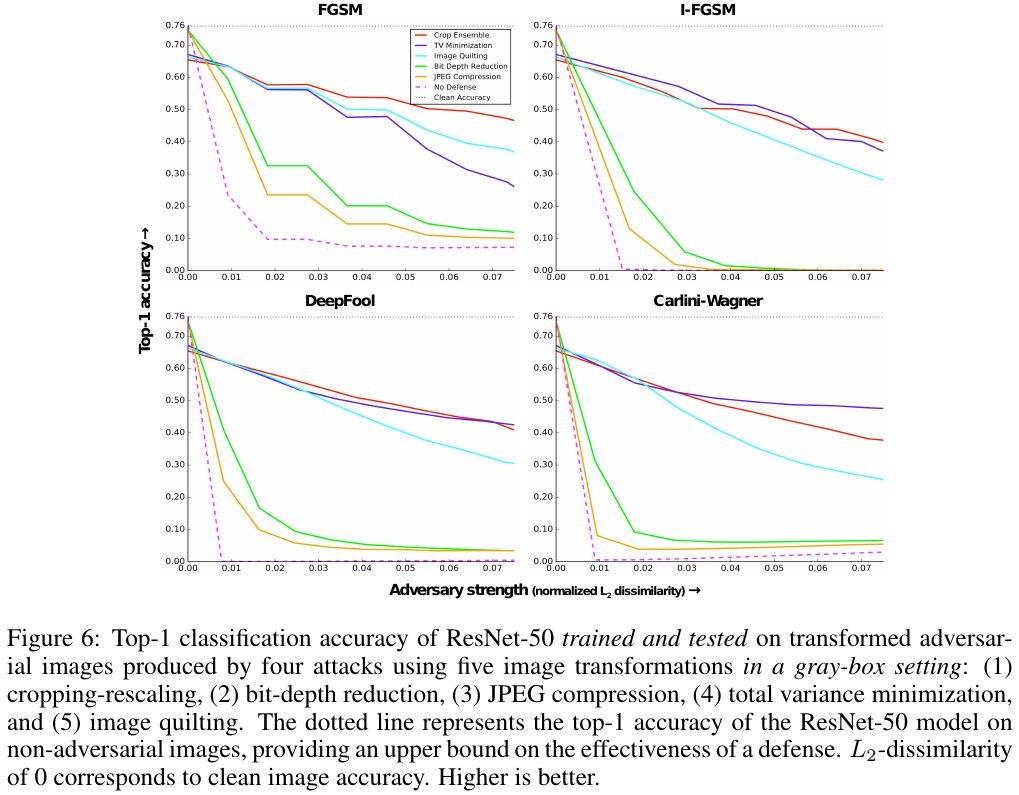

3.2. Gray-Box

- TV and quilting successfully defend against adversarial examples from all four attacks

- quilting severely impacts the model’s accuracy on non-adversarial images

3.2.1. Transformation at Training and Testing

- bit-depth reduction and JPEG compression are weak defenses

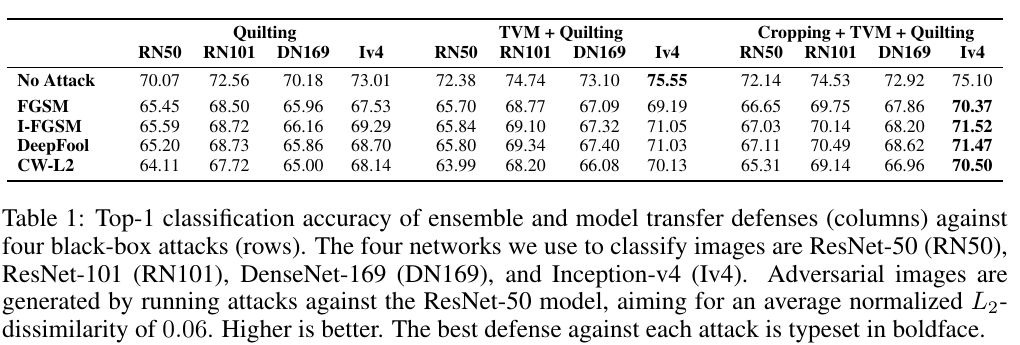

3.3. Black-Box

3.3.1. Ensemble and Model Transfer

- ensembling yields a gain of 1-2% in classification accuracy

4. Discussion

- randomness is particularly crucial in developing strong defenses

- TV and quilting are stronger defenses than deterministic denoising procedures (bit-depth reduction, JPEG compression, non-local means)

- adversarial training focuses on a particular attack, but transformation-based defenses generalize well